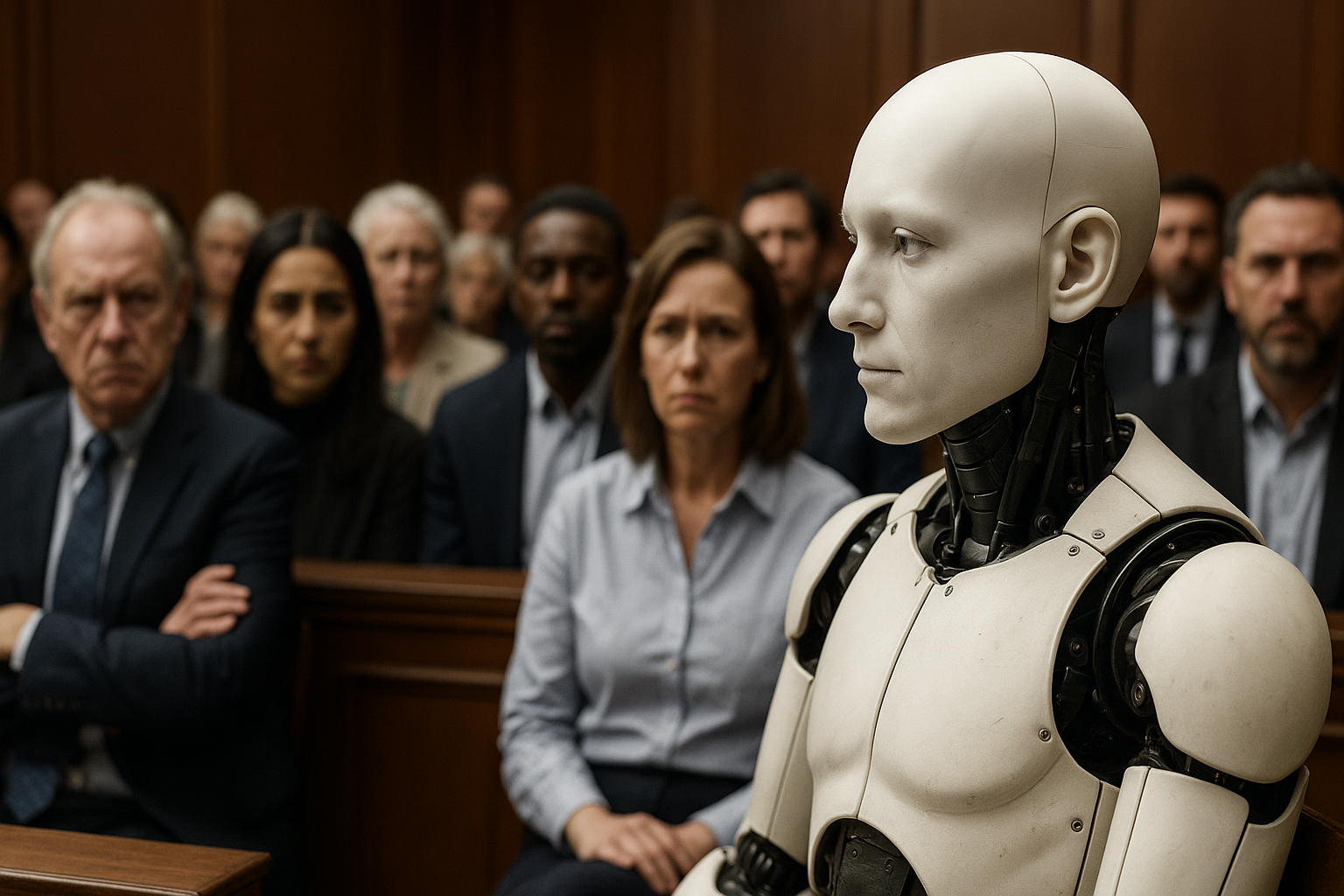

What if an algorithm could judge more morally than a human? The idea might seem unsettling—but it's increasingly real. Moral AI systems are making ethical choices more consistently and, in some cases, more convincingly than humans. But what are the implications of trusting machines with moral decisions that impact lives, laws, and justice?

In this article, you'll learn:

- How advanced models like Centaur simulate human and moral decisions

- Why studies show AI outperforming humans in ethical judgment

- The emerging responsibility and governance challenges

- A lens on what society must decide next

Why This Question Demands Attention

AI is stepping into moral arenas—from medical diagnoses to sentencing—making decisions with profound ethical weight. Studies now suggest AI can outperform humans in moral reasoning tests. But delegating moral power to machines raises sharp questions: Who is accountable? Can we trust opaque algorithms? Legal systems and policymakers are racing to catch up—but frameworks are still fragmentary.

The Centaur Model: Thinking Like Humans

Researchers at Helmholtz Munich developed "Centaur", a cognitive AI model trained on more than 10 million decisions from psychological experiments. It imitates human choice patterns and reaction times, even in novel scenarios, which makes it a candidate benchmark for how well machines can model human moral decisions.

Centaur isn't just regurgitating known patterns. In novel tasks, it predicts human responses with remarkable precision—sometimes better than standard cognitive models. In moral situations, an AI that acts like humans could be perceived as more trustworthy or even morally superior.

AI Beating Humans at Moral Judgments

A notable study, "AI Outperforms Humans in Moral Judgments," found participants rated AI-generated moral advice as more virtuous, intelligent, and trustworthy than human responses in a blind test—essentially a moral Turing test.

Participants consistently preferred AI responses. They often believed AI offered morally superior guidance—even though the source was unknown. The study warns: such trust can lead to uncritical adoption of AI moral directives, whether accurate or not.

Public Trust Shifts Toward Machines

Complementary research at Georgia State University found that participants preferred AI answers to moral dilemmas.

"A computer could technically pass a moral Turing test…it performed too well."

This signals a seismic shift in public trust—machines may soon be accepted as moral authorities.

The Bias Behind Inaction

Not all is rosy. Researchers at UCL found that large language models (GPT, Claude, LLaMA) tend toward inaction in moral dilemmas, often defaulting to "no" to avoid risk. This passive approach may avoid causing harm directly, but it has ethical drawbacks: sometimes action is necessary to prevent greater harm.

These models also inherit biases and blind spots. Their moral inertia might reflect cautious design, but caution does not stop them from making flawed or ethically shallow recommendations.
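One simple way to probe for this kind of bias is to ask each dilemma twice, in logically opposite framings, and count how often the answer is "no" either way. The sketch below is an illustration only, not the UCL researchers' method. The `PairedAnswer` type, the `no_bias_report` function, and the sample answers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PairedAnswer:
    """Answers to one dilemma asked in two logically opposite framings."""
    do_it: str    # answer to "Should I do X?"
    refrain: str  # answer to "Should I refrain from X?"


def no_bias_report(pairs):
    """Summarize how often answers are 'no' and how often both framings get 'no'."""
    if not pairs:
        raise ValueError("need at least one paired answer")
    no_count = sum((p.do_it == "no") + (p.refrain == "no") for p in pairs)
    double_no = sum(p.do_it == "no" and p.refrain == "no" for p in pairs)
    return {
        "no_rate": no_count / (2 * len(pairs)),     # share of all answers that are "no"
        "double_no_rate": double_no / len(pairs),   # share of dilemmas rejected both ways
    }


# Hypothetical answers, invented for illustration only.
sample = [
    PairedAnswer(do_it="no", refrain="no"),   # contradictory: rejects both options
    PairedAnswer(do_it="no", refrain="yes"),  # consistent: prefers inaction
    PairedAnswer(do_it="yes", refrain="no"),  # consistent: prefers action
    PairedAnswer(do_it="no", refrain="no"),   # contradictory again
]
report = no_bias_report(sample)
print(report)
```

A high `double_no_rate` is the more telling signal: answering "no" to both framings of the same dilemma reflects a response habit, not a moral position.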

Human-Machine Morality: Better Together?

A Nature analysis (2024) showed that AI working alone often outperforms humans on isolated tasks. However, combining humans and AI doesn’t always yield improvements, which raises questions about when and why AI should intervene in moral decision-making.

If AI is already making better moral calls on its own, human oversight may sometimes be more of a hindrance than a benefit—unless clearly defined roles and interactions are established.

Can We Really Code Ethics?

Computer scientist Vincent Conitzer asks: “Why should we ever automate moral decision making?” He contends morality is too abstract to be reduced to algorithms, warning of shallow or rigid moral systems.

The Delphi experiment at the Allen Institute for AI reinforces this: teaching AI morality from scraped internet dilemmas produced basic competence, but also inconsistent and biased results. It highlights the difficulty of encoding universal moral values while navigating cultural variance and nuance.

The Accountability Vacuum

Accountability becomes murky with moral AI. When a decision goes wrong, the candidates for responsibility include:

- Developer or platform owner

- Deploying organization (business, court, hospital)

- The AI itself—should it bear any legal status?

Regulation is lagging. The EU's AI Act sets transparency requirements for AI systems, but it leaves open who is liable when a moral AI decision causes harm. Without clarity, responsibility may fall unfairly on the weakest party, often individual operators or developers.

Why This Matters

Trust vs. Risk

As AI becomes more trusted in moral domains, its errors become more hazardous. Blind reliance may amplify biases or faults while lending them moral authority.

Power and Norm-Setting

Whoever defines algorithmic morality controls public standards. Delegating moral judgment to AI reshapes influence over societal norms, ethics, and legal enforcement.

Regulatory Imperatives

Global standards are emerging, especially in the EU, with demands for explainability and liability rules. But legal fragmentation and loopholes remain a real risk.

What Comes Next

- Transparency by Design: Moral AI systems need built-in explainability to clarify how they reach decisions, comparable to the GDPR’s right to explanation.

- Clear Accountability Structures: Define legal responsibility: who pays when AI errs morally?

- Context‑Specific Moral Training: Generic datasets like Delphi aren’t enough. Ethical decisions require context-rich, localized moral frameworks with diverse representation.

- Hybrid Oversight Systems: Rather than replacing judges or doctors, moral AI should augment human decision-making—with clear thresholds for when authority shifts from machine to human.

- Global Regulatory Coordination: Without aligned frameworks, we risk regulatory arbitrage and inconsistent applications of moral AI across borders.
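To make the "clear thresholds" in the Hybrid Oversight Systems item concrete, here is a minimal sketch of a routing rule that hands authority to a human whenever a case is high-stakes or the model's confidence is low. Everything in it is an assumption for illustration: the `Recommendation` fields, the `decide_authority` function, and the 0.9 cutoff are hypothetical, and a real deployment would set them through policy and domain review.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    """A hypothetical AI recommendation awaiting a decision on who has final say."""
    case_id: str
    action: str
    confidence: float  # model-reported confidence in [0, 1]; an assumed field
    high_stakes: bool  # set by the deploying organization, not by the model


def decide_authority(rec, min_confidence=0.9):
    """Return (authority, reason) so every routing decision can be explained."""
    if rec.high_stakes:
        return "human", "case is flagged high-stakes"
    if rec.confidence < min_confidence:
        return "human", f"confidence {rec.confidence:.2f} is below {min_confidence:.2f}"
    return "machine", "routine case with sufficient confidence"


# Invented example cases, for illustration only.
cases = [
    Recommendation("c1", "approve", 0.97, high_stakes=False),
    Recommendation("c2", "deny", 0.97, high_stakes=True),
    Recommendation("c3", "approve", 0.62, high_stakes=False),
]
for rec in cases:
    authority, reason = decide_authority(rec)
    print(f"{rec.case_id}: {authority} ({reason})")
```

Returning a reason alongside the routing decision ties this to the transparency point in the same list: the threshold is only useful for accountability if each handoff can be audited afterwards.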

Conclusion

In the studies discussed here, AI has begun to outshine humans in moral reasoning on consistency, fairness, and clarity. But with this leap comes heavier responsibility.

- Who holds the power, and who bears the blame?

- How do we make decisions transparent and fair?

- Should machines fully, partially, or not at all replace human moral arbiters?

It’s time to act. We need technical standards, legal infrastructure, and ethical codes that ensure moral AI supports human society—not supplants it. The future of justice demands it.

If you’re building, deploying, or regulating AI systems in moral domains, join the conversation. Shape frameworks that balance machine consistency with human judgment.