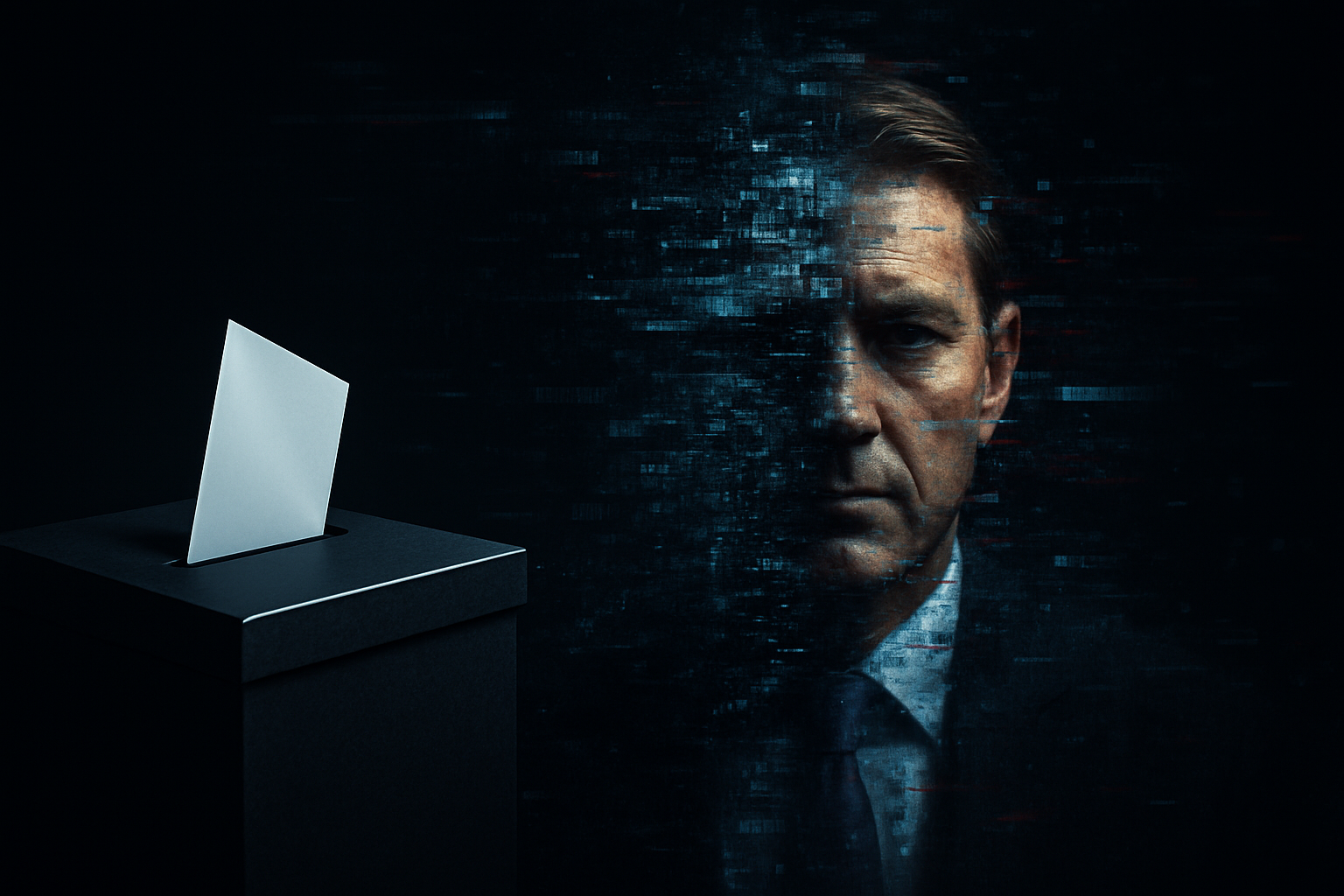

In the biggest global election cycle in decades, with 64 countries voting in 2024–25, a parallel contest is raging: the fight to decide what is real. Deepfakes now dominate government hearings, news headlines, and encrypted group chats alike. From AI‑voiced robocalls telling New Hampshire Democrats to “stay home” to resurrected Indian politicians pitching campaign jingles from beyond the grave, generative models have dragged democracy into an uncanny valley.

“When anyone can make a president say anything, sovereignty starts at the level of perception.” — Lawrence Norden, Brennan Center

This piece unpacks who gets hit, how the fractures cut across culture, and why the next 18 months will decide whether liberal societies can still agree on a shared baseline of facts.

The New Physics of Political Reality

Cheap, Fast, Everywhere

- Voice fakes: Thirty seconds of a public speech is all a mid‑tier model needs to mass‑produce convincing robocalls.

- Video resurrection: Posthumous cameos of beloved leaders hack nostalgia and short‑circuit critical thinking.

- Zero‑day synths: Enterprise‑grade generators iterate weekly, outpacing every published detection benchmark.

A 2025 Stanford AI Index report warns that generative media models now dominate public AI disclosures, dwarfing classical vision and language research.

The Double‑False‑Claim Effect

Politicians now weaponize the uncertainty itself, a dynamic researchers call the “liar’s dividend.” A scandalous but authentic clip can be dismissed as “just another deepfake,” leaving voters paralyzed. The epistemic fog benefits the loudest actors, not the most truthful.

Who Gets Hurt—and How

- Voters lose confidence in their own senses, leading to apathetic abstention that skews turnout.

- Journalists burn cycles verifying footage instead of investigating policy.

- Marginalized communities face micro‑targeted suppression via WhatsApp audio fakes in local dialects.

- Small campaigns without deep war‑rooms struggle to rebut viral fabrications in the critical first 24 hours.

A USC study posted on arXiv about “sleeper social bots” found that AI‑driven accounts can behave like humans for months before unleashing coordinated disinformation, making detection “painfully reactive.”

The Detection Arms Race

Forensic Start‑ups Go Mainstream

- Reality Defender and Sensity AI sell APIs that scan platforms in real time, yet neither exceeds 99% accuracy across new generator architectures.

- Intel FakeCatcher reads subtle blood‑flow signals in facial pixels (photoplethysmography, or PPG), which synthetic faces fail to reproduce, and delivers answers in milliseconds.

- Truepic and other members of the C2PA coalition back “Content Credentials,” which aim to flip the problem: authenticate what’s real instead of playing whack‑a‑mole with debunking.

Authenticity by default—embedding cryptographic signatures at the sensor level—is emerging as the only scalable fix.
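The idea of signing at capture time can be illustrated with a minimal sketch. This is not the C2PA format: real Content Credentials use certificate‑backed asymmetric signatures and a structured manifest, while the example below substitutes a shared‑secret HMAC from the Python standard library purely to show the sign‑then‑verify flow. The names `DEVICE_KEY`, `sign_capture`, and `verify_capture` are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical device secret. Real C2PA signing uses certificate-backed
# asymmetric keys, not a shared secret; HMAC is a stdlib stand-in here.
DEVICE_KEY = b"demo-device-key"


def _canonical(claim: dict) -> bytes:
    # Canonical JSON so signer and verifier hash identical bytes.
    return json.dumps(claim, sort_keys=True, separators=(",", ":")).encode()


def sign_capture(image_bytes: bytes, metadata: dict, key: bytes = DEVICE_KEY) -> dict:
    """Bind a content hash and capture metadata into a signed manifest."""
    claim = {
        "content_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "metadata": metadata,
    }
    signature = hmac.new(key, _canonical(claim), hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_capture(image_bytes: bytes, manifest: dict, key: bytes = DEVICE_KEY) -> bool:
    """Return True only if the manifest is untampered and matches the bytes."""
    expected = hmac.new(key, _canonical(manifest["claim"]), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claim or signature was altered after signing
    actual_hash = hashlib.sha256(image_bytes).hexdigest()
    return manifest["claim"]["content_sha256"] == actual_hash
```

Under these assumptions, a manifest produced by `sign_capture` verifies against the original bytes and fails as soon as either the media or the claimed metadata changes. The point is that verification proves what is authentic without having to recognize every possible fake.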

Still, the AI Index shows detection accuracy lagging several model generations behind creation capability, a gap that widens every quarter.

For the technical blueprint, see the C2PA specification and Intel’s research note on physiological‑based deepfake detection.

Patchwork Law, Global Stakes

United States

- The FCC bans AI‑generated voices in robocalls; fines accumulate, but only after the damage is done.

- 24 states adopt deepfake election laws—California forces takedown within 48 hours, facing First‑Amendment challenges.

European Union

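- The EU Artificial Intelligence Act, in force since August 2024 with obligations phasing in over the following years, requires AI‑generated or manipulated media to be disclosed as synthetic. Separately, the Digital Services Act empowers regulators to fine Very Large Online Platforms that fail to comply with its rules.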

Voluntary Accords

The AI Elections Accord commits 27 tech companies to voluntary watermarking pledges, yet NGO audits cite “meaningful implementation gaps.” Regulation runs slower than code commits.

Culture, Ethics, and the Human Psyche

Deepfakes tap primal biases: we trust faces, voices, and shared memories. When those cues implode, social capital erodes, and communities retreat into niche “reality silos.” The psychological toll is subtle—hyper‑vigilance, collective fatigue, and a spiraling sense that nothing can be verified anyway.

When truth feels optional, civic engagement becomes irrational.

Why This Matters

Deepfakes are not a tech‑industry side quest; they are a stress‑test for liberal democracy itself. If societies cannot anchor shared facts during elections, every downstream debate, from climate to health to security, fractures. The next 18 months will determine whether authenticity becomes a protected public good or a nostalgic relic of the pre‑AI era.

Toward a Resilient 2030

- Hardware‑rooted authenticity: Phone cameras and streaming APIs must ship with C2PA‑style default signing.

- 24‑hour judicial fast lanes: Courts need emergency injunction protocols faster than a news cycle.

- Civic inoculation: Media‑literacy campaigns should teach citizens to expect, spot, and report fakes—before the ballots drop.

- Open science benchmarking: Independent labs must stress‑test detectors against the very latest models, with transparent leaderboards.
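A transparent leaderboard of the kind proposed in the last item could report more than a single headline number. The sketch below is one possible shape, not an established benchmark: it breaks a detector's accuracy down by generator so that a weak spot on the newest model is not averaged away. The function names and the `(generator, is_fake, predicted_fake)` record layout are assumptions made for illustration.

```python
from collections import defaultdict


def accuracy_by_generator(results):
    """results: iterable of (generator_name, is_fake, predicted_fake) tuples.

    Returns {generator_name: accuracy} so weak spots are not averaged away.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for generator, is_fake, predicted_fake in results:
        total[generator] += 1
        if is_fake == predicted_fake:
            correct[generator] += 1
    return {g: correct[g] / total[g] for g in total}


def leaderboard_row(detector_name, results):
    """Summarize one detector: overall accuracy plus its worst generator."""
    results = list(results)  # allow generators; we iterate more than once
    per_gen = accuracy_by_generator(results)
    overall = sum(1 for _, truth, pred in results if truth == pred) / len(results)
    worst = min(per_gen, key=per_gen.get)
    return {
        "detector": detector_name,
        "overall": overall,
        "worst_generator": worst,
        "worst_accuracy": per_gen[worst],
    }
```

Publishing the worst‑case column alongside the overall score makes it harder for a detector to look strong while failing on exactly the generators that matter most in an election week.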

For a deeper look at AI governance, revisit Vastkind’s guide to responsible innovation frameworks and our explainer on building trust layers into digital ecosystems.

Deepfakes are the canary in democracy’s coal mine. We can still write the rules of authenticity—but only if we act before disbelief becomes ambient noise. Regulators, technologists, and citizens share custody of reality now.